Social Media

Twitter says it will now ask everyone for feedback about its policy changes, starting today

Twitter says it’s going to change the way it creates rules for the use of its service so that the process now includes community feedback. Previously, the company followed its own policy development process, including taking input from its Trust and Safety Council and various experts. Now, it says it’s going to try something new: it’s going to ask its users.

According to an announcement published this morning, Twitter says it will ask everyone for feedback on a new policy before it becomes a part of Twitter’s official Rules.

It’s kicking off this change by asking for feedback on its new policy around dehumanizing language on Twitter, it says.

Over the past three months, Twitter has been working to create a policy that addresses language that “makes someone feel less than human” – something that can have real-world repercussions, including “normalizing serious violence,” the company explains.

To some extent, dehumanizing language is covered under Twitter’s existing hateful conduct policy, which addresses hate speech that includes the promotion of violence, or direct attacks or threats against people based on factors like their race, ethnicity, national origin, sexual orientation, gender, gender identity, religious affiliation, age, disability, or serious disease.

However, there are still ways to be abusive on Twitter outside of those guidelines, and dehumanizing language is one of them.

The new policy is meant to expand the hateful conduct policy to also prohibit language that dehumanizes others based on “their membership in an identifiable group, even when the material does not include a direct target,” says Twitter.

The company isn’t soliciting user feedback over email or Twitter, however.

Instead, it has launched a survey.

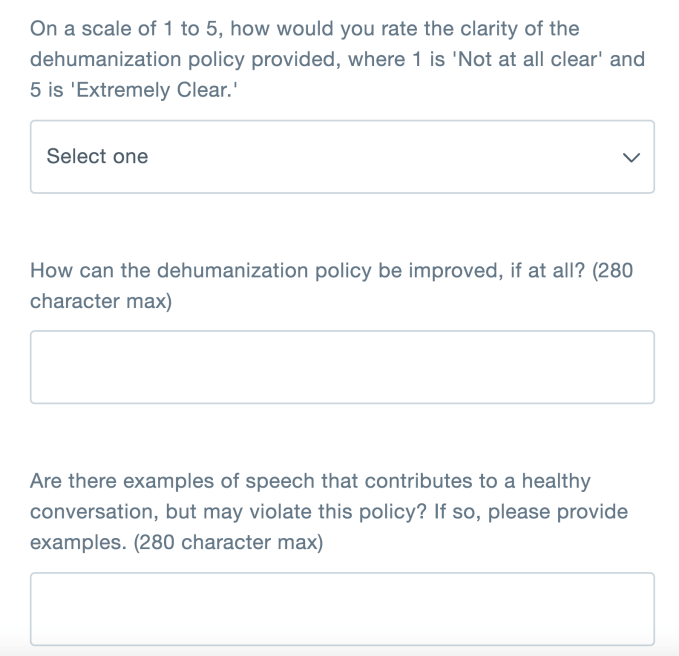

Available until October 9 at 6:00 AM PT, the survey asks only a few questions after presenting the new policy’s language for you to read through.

For example, it asks users to rate the clarity of the policy itself on a scale of one to five. It then gives you 280 characters max – just like on Twitter – to suggest how the policy could be improved. Similarly, you have 280 characters to offer examples of speech that contribute to a healthy conversation, but may violate this policy – Twitter’s attempt at finding any loopholes or exceptions.

And it gives you another 280 characters to offer additional feedback or thoughts.

You also have to provide your age, gender, (optionally) your username, and say if you’re willing to receive an email follow-up if Twitter has more questions about your responses.

Twitter doesn’t say how much community feedback will guide its decision-making, though. It simply says that after the feedback, it will then continue with its regular process, which passes the policy through a cross-functional working group, including members of its policy development, user research, engineering, and enforcement teams.

The idea to involve the community in policy-making is a notable change, and one that could make people feel more involved with the definition of the rules, and therefore – perhaps! – more likely to respect them.

But Twitter’s issues around abuse and hate speech on its network don’t really stem from poor policies – its policies actually spell things out fairly well, in many cases, about what should be allowed and what should not.

Twitter’s problems tend to stem from lax enforcement. The company has far too often declined to penalize or ban users whose content is clearly hateful in its nature, in an effort to remain an open platform for “all voices” – including those with extreme ideologies. Case in point: it was effectively the last of the large social platforms to ban the abusive content posted by Alex Jones and his website Infowars.

Users also regularly complain that they have been subjected to tweets that violate Twitter’s guidelines and rules, yet no action is taken.

It’s interesting, at times, to consider how differently Twitter could have evolved if community moderation – similar to the moderation on Reddit or even the moderation that takes place on open source Twitter clone Mastodon – had been a part of Twitter’s service from day one. Or how things would look if marginalized groups and those who are often victims of harassment and hate speech had been involved directly with building the platform in the early days. Would Twitter be a different place?

But that’s not where we are.

The new dehumanization policy Twitter is asking about is below:

Twitter’s Dehumanization Policy

You may not dehumanize anyone based on membership in an identifiable group, as this speech can lead to offline harm.

Definitions:

Dehumanization: Language that treats others as less than human. Dehumanization can occur when others are denied of human qualities (animalistic dehumanization) or when others are denied of human nature (mechanistic dehumanization). Examples can include comparing groups to animals and viruses (animalistic), or reducing groups to their genitalia (mechanistic).

Identifiable group: Any group of people that can be distinguished by their shared characteristics such as their race, ethnicity, national origin, sexual orientation, gender, gender identity, religious affiliation, age, disability, serious disease, occupation, political beliefs, location, or social practices.
