Facebook rolls out new tools for Group admins, including automated moderation aids

Facebook today introduced a new set of tools aimed at helping Facebook Group administrators get a better handle on their online communities and, potentially, keep conversations from going off the rails. Among the more interesting new tools is a machine-learning-powered feature that alerts admins to potentially unhealthy conversations taking place in their group. Another lets admins slow down the pace of a heated conversation by limiting how often group members can post.

Facebook Groups are now a significant reason people continue to use the social network. There are “tens of millions” of groups, managed by more than 70 million active admins and moderators worldwide, Facebook says.

The company for years has been working to roll out better tools for these group owners, who often get overwhelmed by the administrative responsibilities that come with running an online community at scale. As a result, many admins give up the job and leave groups to run somewhat unmanaged — thus allowing them to turn into breeding grounds for misinformation, spam and abuse.

Facebook last fall tried to address this problem by rolling out new group policies to crack down on groups without an active admin, among other things. Of course, the company’s preference would be to keep groups running and growing by making them easier to operate.

That’s where today’s new set of features comes in.

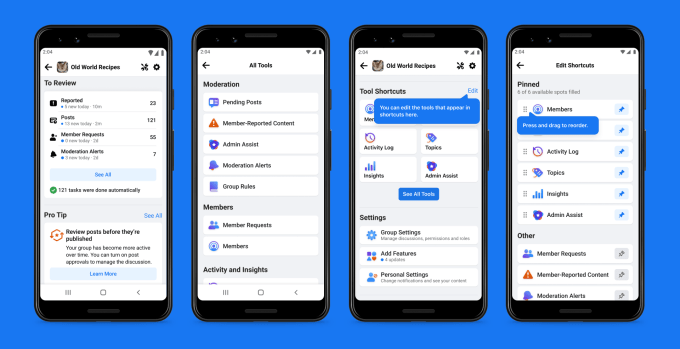

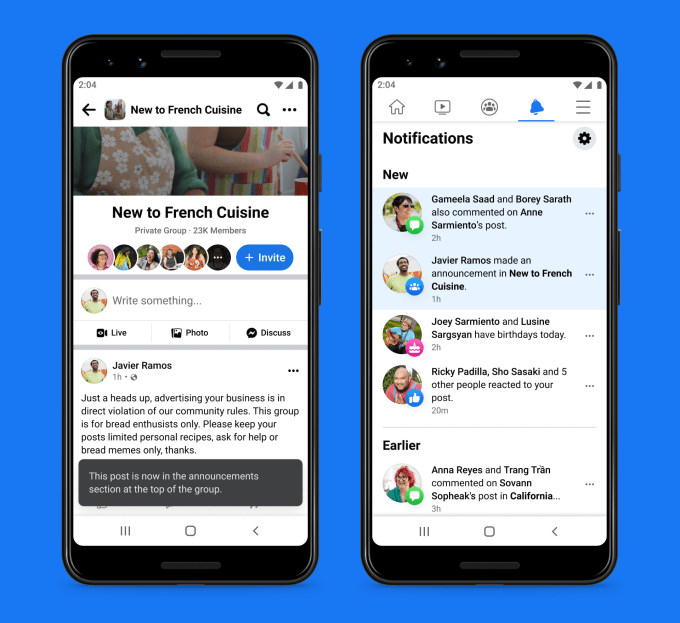

A new dashboard called Admin Home will centralize admin tools, settings and features in one place, as well as present “pro tips” that suggest other helpful tools tailored to the group’s needs.

Image Credits: Facebook

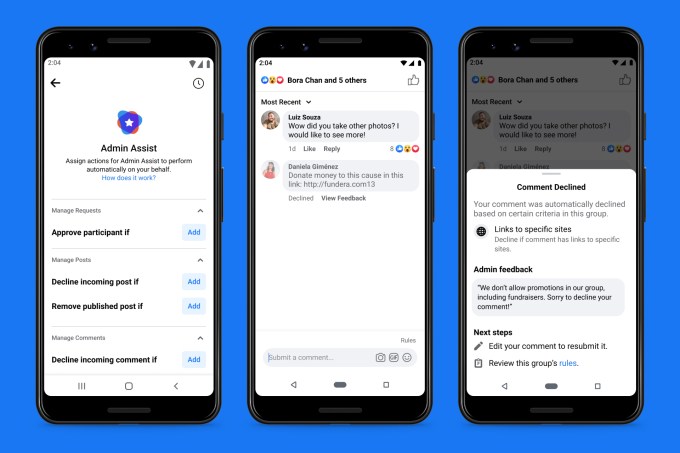

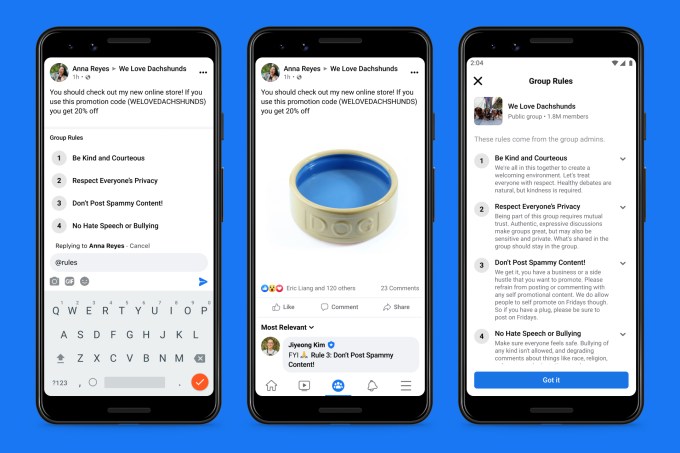

A new feature called Admin Assist will let admins moderate comments in their groups automatically by setting up criteria that restrict comments and posts proactively. Otherwise, admins have to go back and delete content after the fact, which can be problematic, especially once a discussion is underway and members are invested in the conversation.

For example, admins can now restrict people from posting if they haven’t had a Facebook account for very long or if they have recently violated the group’s rules. Admins can also automatically decline posts that contain specific promotional content (perhaps MLM links! Hooray!) and automatically tell the author why such posts aren’t allowed.

Admins can also take advantage of suggested preset criteria from Facebook to help with limiting spam and managing conflict.

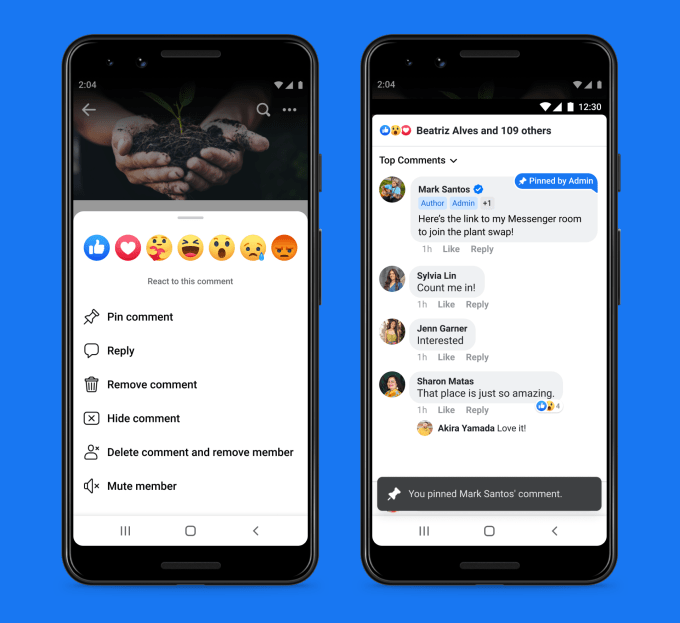

One notable update is a new moderation alert type dubbed “conflict alerts.” This feature, currently in testing, will notify admins when a potentially contentious or unhealthy conversation is taking place in the group, Facebook says. An admin could then act quickly, for example by turning off comments, limiting who can comment, removing a post, or handling the situation however they see fit.

Conflict alerts are powered by a machine-learning model that looks at multiple signals, including reply time and comment volume, to determine whether engagement between users has led, or might lead, to negative interactions, Facebook says.

This is something like an automated expansion of the Keyword Alerts feature, which many admins already use to watch for topics that tend to spark contentious conversations.

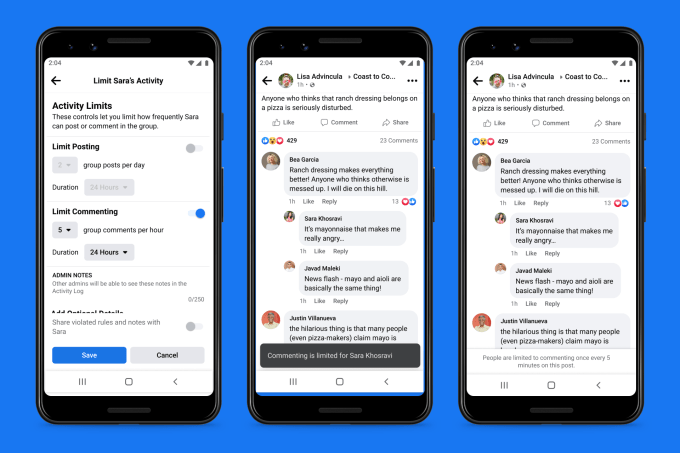

A related new feature lets admins limit how often specific members can comment, or how often comments can be added to posts the admins select.

When this limit is enabled, members can leave only one comment every five minutes. The idea is that forcing users to pause and consider their words amid a heated debate could lead to more civil conversations. Other social networks have tried similar ideas, such as Twitter’s nudges to read an article before retweeting it, or its prompts that flag potentially harmful replies and give you a chance to revise them before posting.
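Facebook hasn’t said how the one-comment-every-five-minutes limit is enforced. As a rough illustration only, here is a minimal Python sketch of a per-member cooldown, assuming the limit is tracked per member and measured from that member’s last accepted comment. The class `CommentCooldown` and its method `try_comment` are hypothetical names, not part of any Facebook API.

```python
from dataclasses import dataclass, field


@dataclass
class CommentCooldown:
    """Hypothetical per-member cooldown; an illustration, not Facebook's code."""

    # Five minutes, matching the limit described in the article.
    cooldown_seconds: float = 300.0
    # Timestamp (in seconds) of each member's last accepted comment.
    _last_comment: dict = field(default_factory=dict, init=False, repr=False)

    def try_comment(self, member_id: str, now: float) -> bool:
        """Accept and record the comment if the member is off cooldown."""
        last = self._last_comment.get(member_id)
        if last is not None and now - last < self.cooldown_seconds:
            return False  # Too soon: the comment is held back.
        self._last_comment[member_id] = now
        return True


cooldown = CommentCooldown()
print(cooldown.try_comment("alice", now=0))    # True: first comment
print(cooldown.try_comment("alice", now=120))  # False: only two minutes later
print(cooldown.try_comment("bob", now=120))    # True: limits are per member
print(cooldown.try_comment("alice", now=300))  # True: five minutes have passed
```

Passing `now` in explicitly, rather than reading the clock inside the method, keeps the sketch deterministic and easy to test.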

Facebook, however, has largely embraced engagement on its platform, even when it’s not leading to positive interactions or experiences. Though small, this particular feature is an admission that building a healthy online community means sometimes people shouldn’t be able to immediately react and comment with whatever thought first popped into their head.

Additionally, Facebook is testing tools that allow admins to temporarily limit activity from certain group members.

With these tools, admins will be able to set how many posts (between one and nine) a given member may share per day, and how long that limit stays in effect (12 hours, 24 hours, 3 days, 7 days, 14 days or 28 days). Admins will also be able to set how many comments (between one and 30, in five-comment increments) a given member may leave per hour, with the same choice of durations.
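To make the two settings concrete (a cap per time window, plus how long the cap lasts), here is a minimal Python sketch. It assumes a rolling window rather than calendar days or clock hours, and it does not encode the five-comment increments. The names `MemberLimit`, `allow` and `ALLOWED_DURATIONS` are hypothetical; Facebook has not published how these limits work internally.

```python
from collections import deque
from dataclasses import dataclass, field

HOUR = 3600
DAY = 24 * HOUR
# How long a limit can stay active, per the options listed in the article.
ALLOWED_DURATIONS = {12 * HOUR, DAY, 3 * DAY, 7 * DAY, 14 * DAY, 28 * DAY}


@dataclass
class MemberLimit:
    """Hypothetical temporary cap on one member's posts or comments."""

    max_count: int    # e.g. 1-9 posts per day, or 1-30 comments per hour
    window: int       # DAY for posts, HOUR for comments (rolling window assumed)
    starts_at: float  # when the admin applied the limit, in seconds
    duration: int     # how long the limit lasts; one of ALLOWED_DURATIONS
    _events: deque = field(default_factory=deque, init=False, repr=False)

    def __post_init__(self) -> None:
        if self.duration not in ALLOWED_DURATIONS:
            raise ValueError(f"unsupported duration: {self.duration}")
        if self.max_count < 1:
            raise ValueError("max_count must be at least 1")

    def allow(self, now: float) -> bool:
        """Record and allow an action unless it would exceed the cap."""
        if now >= self.starts_at + self.duration:
            return True  # The limit has expired; the member is unrestricted.
        # Forget actions that have slid out of the rolling window.
        while self._events and now - self._events[0] >= self.window:
            self._events.popleft()
        if len(self._events) >= self.max_count:
            return False
        self._events.append(now)
        return True


# Two posts per day, applied for 12 hours.
posts = MemberLimit(max_count=2, window=DAY, starts_at=0, duration=12 * HOUR)
print(posts.allow(0), posts.allow(10), posts.allow(20))  # True True False
print(posts.allow(12 * HOUR))  # True: the 12-hour limit has ended
```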

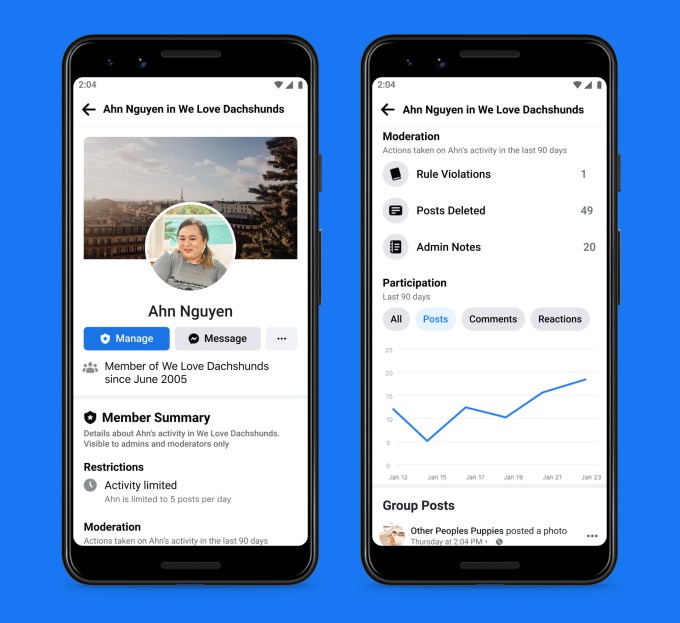

Along these same lines of building healthier communities, a new member summary feature will give admins an overview of each member’s activity in their group, showing how many times that member has posted and commented, had posts removed, or been muted.

Facebook doesn’t say how admins should use this new tool, but one could imagine them using the detailed summary to occasionally clean up their member base by removing bad actors who repeatedly disrupt discussions. They might also use it to find regular contributors with no violations and promote them to moderator roles.

Admins will also be able to tag their group rules in comment sections, disallow certain post types (e.g., Polls or Events), and ask Facebook to re-review decisions about group violations that they believe were made in error.

Of particular interest, though a bit buried amid the slew of other news, is the return of Chats, which was previously announced.

Facebook abruptly removed Chats in 2019, possibly because of spam, some speculated. (Facebook said the reason was product infrastructure.) As before, Chats can have up to 250 people, including active members and those who opted into notifications from the chat. Once this limit is reached, other members cannot engage with that chat room until existing active participants either leave the chat or opt out of notifications.

Now, Facebook group members can start, find and engage in Chats with others within Facebook Groups instead of using Messenger. Admins and moderators can also have their own chats.

Notably, this change follows the growth of messaging-based social networks like IRL, a new unicorn thanks to its $1.17 billion valuation, as well as the growth of messaging apps like Telegram and Signal and other alternative social networks.

Along with this large set of new features, Facebook also made changes to some existing features, based on feedback from admins.

Facebook is now testing pinned comments and has introduced a new “admin announcement” post type that notifies group members of important news (if they receive notifications for that group).

Plus, admins will be able to share feedback when they decline group members.

The changes are rolling out across Facebook Groups globally in the coming weeks.
