Facebook tests News Feed controls that let people see less from groups and pages

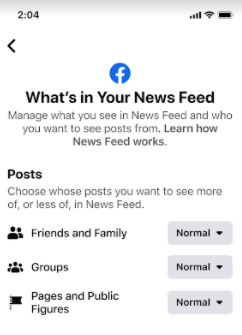

Facebook announced Thursday that it’s running a test to give users a sliver more control over what they see on the platform.

Image Credits: Facebook

The test will go live in Facebook’s app for English-speaking users. It adds three sub-menus to Facebook’s menu for managing what shows up in the News Feed: friends and family, groups, and pages and public figures. Users in the test can keep the share of each type of post in their feed at “normal” or change it to more or less, depending on their preferences.

Anyone in the test can do the same for topics, designating things they are interested in or stuff they’d rather not see. In a blog post, Facebook says the test will initially reach “a small percentage of people” around the world and will expand gradually over the next few weeks.

Facebook is also expanding a tool that lets advertisers exclude their content from certain topic domains, allowing brands to opt out of appearing next to “news and politics,” “social issues” and “crime and tragedy.” “When an advertiser selects one or more topics, their ad will not be delivered to people recently engaging with those topics in their News Feed,” the company wrote in a blog post.

Facebook’s algorithms are notorious for promoting inflammatory content and dangerous misinformation. Given that, Facebook and its newly named parent company, Meta, are under mounting regulatory pressure to clean up the platform and make its practices more transparent. As Congress mulls solutions that could give users more control over what they see and strip away some of the opacity around algorithmic content, Facebook is likely holding out hope that there’s still time left to self-regulate.

Last month, testifying before Congress, Facebook whistleblower Frances Haugen called attention to the ways that Facebook’s opaque algorithms can prove dangerous, particularly in countries beyond the company’s most scrutinized markets.

Even within the U.S. and Europe, the company’s decision to prioritize engagement in its News Feed ranking systems enabled divisive content and politically inflammatory posts to soar.

“One of the consequences of how Facebook is picking out that content today is that it’s optimizing for content that gets engagement, or reaction,” Haugen said on “60 Minutes” last month. “But its own research is showing that content that is hateful, that is divisive, that is polarizing — it’s easier to inspire people to anger than it is to other emotions.”
