
Amazon SageMaker HyperPod makes it easier to train and fine-tune LLMs

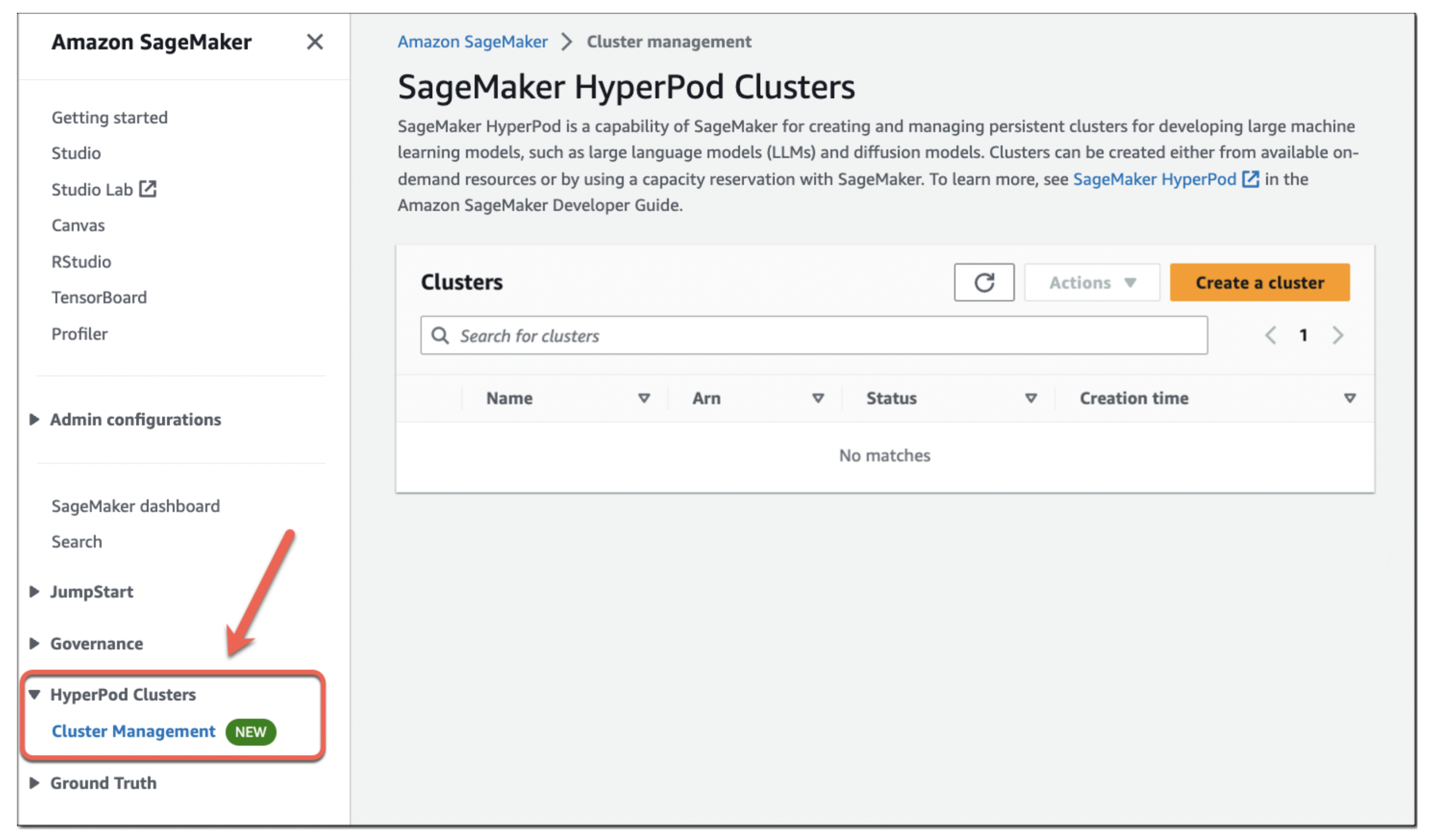

At its re:Invent conference, Amazon’s AWS cloud arm today announced the launch of SageMaker HyperPod, a new purpose-built service for training and fine-tuning large language models. SageMaker HyperPod is now generally available.

Amazon has long bet on SageMaker, its service for building, training and deploying machine learning models, as the backbone of its machine learning strategy. Now, with the advent of generative AI, it's perhaps no surprise that the company is also leaning on SageMaker as the core product for helping its users train and fine-tune large language models (LLMs).

“SageMaker HyperPod gives you the ability to create a distributed cluster with accelerated instances that’s optimized for distributed training,” Ankur Mehrotra, AWS’ general manager for SageMaker, told me in an interview ahead of today’s announcement. “It gives you the tools to efficiently distribute models and data across your cluster — and that speeds up your training process.”

He also noted that SageMaker HyperPod lets users save checkpoints frequently, so they can pause, analyze and optimize the training process without having to start over. The service also includes a number of fail-safes, so that when a GPU goes down for some reason, the entire training job doesn't fail with it.
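Frequent checkpointing is what allows a job to resume after a fault instead of restarting from scratch. The article gives no details of how HyperPod implements this, so the following is only a generic, pure-Python sketch of periodic checkpointing with resume-on-restart. The functions `save_checkpoint`, `load_checkpoint` and `train`, the JSON file format and the single "weight" are hypothetical illustrations, not SageMaker APIs.

```python
import json
import os
import tempfile


def save_checkpoint(path, step, state):
    """Write the training state atomically so a crash mid-write can't corrupt it."""
    tmp_path = path + ".tmp"
    with open(tmp_path, "w") as f:
        json.dump({"step": step, "state": state}, f)
    os.replace(tmp_path, path)  # atomic rename replaces the previous checkpoint


def load_checkpoint(path):
    """Return (step, state) from the last checkpoint, or a fresh start if none exists."""
    if not os.path.exists(path):
        return 0, {"weight": 0.0}
    with open(path) as f:
        data = json.load(f)
    return data["step"], data["state"]


def train(path, total_steps, checkpoint_every, fail_at=None):
    """Hypothetical toy training loop: each step nudges a single 'weight'.

    fail_at simulates a hardware fault (e.g. a GPU dropping out) at a given step.
    """
    step, state = load_checkpoint(path)  # resume wherever the last run left off
    while step < total_steps:
        if fail_at is not None and step == fail_at:
            raise RuntimeError(f"simulated node failure at step {step}")
        state["weight"] += 0.1  # stand-in for an optimizer update
        step += 1
        if step % checkpoint_every == 0:
            save_checkpoint(path, step, state)
    return step, state


if __name__ == "__main__":
    ckpt = os.path.join(tempfile.mkdtemp(), "ckpt.json")
    try:
        train(ckpt, total_steps=10, checkpoint_every=3, fail_at=7)
    except RuntimeError as err:
        print(err)
    # The second run picks up from the step-6 checkpoint rather than step 0.
    step, state = train(ckpt, total_steps=10, checkpoint_every=3)
    print(step, round(state["weight"], 1))
```

Only the work done since the last checkpoint (here, step 7) is repeated after the simulated failure. Checkpointing more often shrinks that lost work at the cost of more write overhead.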

“For an ML team, for instance, that’s just interested in training the model — for them, it becomes like a zero-touch experience and the cluster becomes sort of a self-healing cluster in some sense,” Mehrotra explained. “Overall, these capabilities can help you train foundation models up to 40 percent faster, which, if you think about the cost and the time to market, is a huge differentiator.”

Users can opt to train on Amazon’s own custom Trainium (and now Trainium 2) chips or Nvidia-based GPU instances, including those using the H100 processor. The company promises that HyperPod can speed up the training process by up to 40%.

AWS already has some experience using SageMaker to build LLMs. The Falcon 180B model, for example, was trained on SageMaker using a cluster of thousands of A100 GPUs. Mehrotra noted that AWS was able to apply what it learned from that project, along with its earlier experience scaling SageMaker, to build HyperPod.

Perplexity AI’s co-founder and CEO Aravind Srinivas told me that his company got early access to the service during its private beta. He noted that his team was initially skeptical about using AWS for training and fine-tuning its models.

“We did not work with AWS before,” he said. “There was a myth — it’s a myth, it’s not a fact — that AWS does not have great infrastructure for large model training and obviously we didn’t have time to do due diligence, so we believed it.” The team got connected with AWS, though, and the engineers there asked them to test the service out (for free). He also noted that he has found it easy to get support from AWS and to get access to enough GPUs for Perplexity’s use case. It obviously helped that the team was already familiar with doing inference on AWS.

Srinivas also stressed that the AWS HyperPod team focused strongly on speeding up the interconnects that link Nvidia’s graphics cards. “They went and optimized the primitives — Nvidia’s various primitives — that allow you to communicate these gradients and parameters across different nodes,” he explained.
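In data-parallel training, the standard primitive for exchanging gradients is an all-reduce: every node ends up with the element-wise sum (or average) of all nodes' gradients. The article does not say which primitives AWS tuned or how. The pure-Python simulation below illustrates ring all-reduce, one well-known algorithm for this collective, to show what such a primitive computes and why it scales. The function names are hypothetical, and this is not AWS's or Nvidia's implementation.

```python
from typing import List


def split_chunks(vec: List[float], n: int) -> List[List[float]]:
    """Split a gradient vector into n contiguous chunks (trailing ones may be short or empty)."""
    size = -(-len(vec) // n)  # ceiling division
    return [vec[k * size:(k + 1) * size] for k in range(n)]


def ring_allreduce(grads: List[List[float]]) -> List[List[float]]:
    """Simulate ring all-reduce: every worker ends with the element-wise sum.

    Each worker only sends to its right-hand neighbour, and sends 2 * (n - 1)
    chunks of roughly len / n elements, so per-worker traffic stays roughly
    constant as the number of workers grows.
    """
    n = len(grads)
    chunks = [split_chunks(list(g), n) for g in grads]

    # Phase 1: reduce-scatter. After n - 1 steps, worker i holds the fully
    # summed chunk (i + 1) % n.
    for step in range(n - 1):
        # Build every message first so the sends within one step are simultaneous.
        msgs = [((i + 1) % n, (i - step) % n, chunks[i][(i - step) % n]) for i in range(n)]
        for dst, k, data in msgs:
            chunks[dst][k] = [a + b for a, b in zip(chunks[dst][k], data)]

    # Phase 2: all-gather. Each worker forwards a completed chunk, which
    # overwrites the neighbour's partial copy.
    for step in range(n - 1):
        msgs = [((i + 1) % n, (i + 1 - step) % n, chunks[i][(i + 1 - step) % n]) for i in range(n)]
        for dst, k, data in msgs:
            chunks[dst][k] = list(data)

    # Reassemble each worker's chunks into one flat gradient vector.
    return [[x for chunk in worker for x in chunk] for worker in chunks]


if __name__ == "__main__":
    # Three hypothetical workers, each holding a local gradient for the same 4 parameters.
    local_grads = [[1, 2, 3, 4], [10, 20, 30, 40], [100, 200, 300, 400]]
    summed = ring_allreduce(local_grads)
    n = len(local_grads)
    averaged = [[x / n for x in worker] for worker in summed]
    print(summed[0], averaged[0])
```

Dividing the summed result by the number of workers gives the averaged gradient that each replica applies. In a real cluster, the speed of each of those neighbour-to-neighbour transfers is set by the interconnect, which is why tuning these primitives matters at scale.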
