How Crisis Text Line’s former for-profit arm went from ‘empathy training’ to customer service software

When Crisis Text Line gave its then-CEO permission to start a for-profit company called Loris AI employing the suicide chatline’s user data, the company’s mission was twofold: Make money to support CTL, and do good. It’s now clear that, over the past four years, these intertwined goals got warped along the way.

Today, Loris AI is a customer service company whose machine learning-powered software analyzes real-time chats and suggests language for responses. But when former CEO Nancy Lublin pitched the board and the press on a CTL spinoff that would draw on the suicide chatline’s communications expertise and learnings from a trove of CTL conversation data, the vision was different. Loris’ original purpose was to provide communications coaching to businesses, teaching their employees empathy and the ability to navigate difficult conversations. Customer service training was one possible application, but Lublin also described the business as a way to empower marginalized voices.

That lofty business model was short-lived. Over the course of the company’s first year, Loris AI pivoted from creating empathy training videos and software to selling customer service software.

Lublin, who is no longer affiliated with Loris or CTL, was not able to provide a comment at Mashable’s request. Loris responded to Mashable’s questions via emailed answers from a company spokesperson. The company says that it shifted from corporate trainings to customer service because of a need to scale, and maintains that its business today is aligned with its original mission.

“It’s the same concept of empathetic conversational training, which evolved over time in terms of the medium we used,” the spokeswoman said.

But empathy is a complex emotion and skill. Communication among colleagues might require some of the same ingredients of empathy as conversations with customer service agents. Still, experts are skeptical that a mental health-aligned mission of “empathetic conversational training” really applies in a customer service context.

“Empathy is such a buzzy term, that I think it’s used in so many ways that it can lose its meaning,” Jamil Zaki, a Stanford University psychology professor who has published a book about empathy, said. “We need to ask ourselves, where is empathy in their goal set? I mean, it sounds a little bit grandiose to say our mission is to build empathy, when what you’re doing is just making customer service more efficient.”

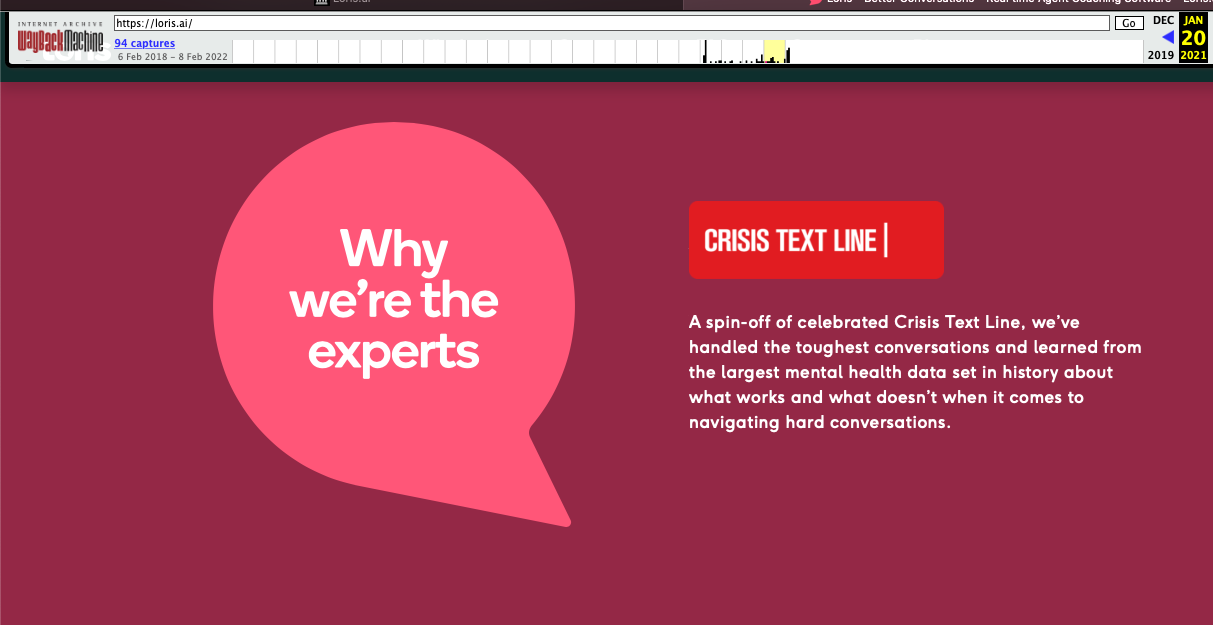

Pivots for startups are common. But not all nascent tech companies get their start with an infusion of valuable data and marketing buzz from a non-profit that helps people in crisis or contemplating suicide — an association the company continued to advertise as part of its edge years later. Only this month, after CTL ended its relationship with Loris following public outrage about the data sharing prompted by a Politico article, and a request from the FCC to cease the arrangement, did the company remove marketing from the website touting its relationship with the chatline.

A Wayback Machine screenshot from January 2021 shows Loris advertised Crisis Text Line’s expertise in ‘navigating hard conversations’ to bolster its own credibility.

Credit: Screenshot: Wayback Machine

“One should never compromise the mission and the original purpose of the business to make an extra buck,” said Mac McCabe, a strategic advisor to impact-focused companies and long-time member of what is now the American Sustainable Business Network, which helps bring social good to the private sector.

Lublin used the idea of empathy to sell her board and the public on a for-profit use of people’s conversations about suicide. But ultimately, that’s all the vision amounted to: A sales technique.

‘Mission Aligned’

Crisis Text Line board member Danah Boyd describes the late 2010s in a blog post as a time when the organization was both strapped for cash and receiving requests from researchers, insurers, and other entities to access data from its chat logs. Boyd says she and the board were highly selective about the researchers to whom they gave (anonymized) data, and did not want to sell data, ever.

But Lublin presented a business idea that seemed to answer both the need to make money and the desire to do some good. Based on feedback from CTL volunteers that its communications training helped them in other areas of their lives, the opportunity Lublin pitched was corporate empathy training. Boyd writes that the controlled nature of the data sharing felt ethical, since the for-profit entity would only get anonymized batches of data, not continuous access. But, crucially, the Loris vision felt “mission aligned,” she said.

Boyd writes that while some members voted in favor of Loris’ creation for the financial upside, she voted in favor for “a different reason.”

“If another entity could train more people to develop the skills our crisis counselors were developing, perhaps the need for a crisis line would be reduced,” Boyd writes. “If we could build tools that combat the cycles of pain and suffering, we could pay forward what we were learning from those we served. I wanted to help others develop and leverage empathy.”

These were the same advantages Lublin touted to the press, including Mashable, when CTL announced the creation of Loris in February 2018.

“When people avoid hard conversations, think about who loses,” Lublin told Mashable at the time. “It’s super important to us that people learn how to have hard conversations so women, people of color, and people who are marginalized can have a seat at the table.”

Sounds peachy, and like a potentially noble use of learnings from highly sensitive anonymized data, if there ever was any such use. But Lublin’s words in Mashable’s article and other outlets’ coverage read differently now, especially in the wake of Lublin’s 2020 fall from grace and subsequent firing, when she was accused of being a toxic boss and of fostering a racially insensitive workplace.

And the business of corporate empathy training Lublin was selling to her board and the public was not the one she eventually helped build.

A word cloud on Loris’ website from 2018.

Credit: Screenshot: Wayback Machine

Two roads diverge

In early 2018, Loris’ business was getting off the ground in the form of creating communications training videos for businesses, but it was just one part of the whole. The website at the time mentions that “the intelligence gleaned from both the Crisis Text Line training and large sentiment-rich data corpus will be leveraged to create Loris.ai enterprise software.” In 2018, Lublin told Mashable that Loris software would train employees how to navigate challenging conversations.

One person who met with Lublin for a recruiting meeting in March 2018 (who wants to remain anonymous to protect their identity for professional reasons) recalls that Lublin was already conceiving of customer service software as a direction for the business.

A 2018 screenshot shows how Loris planned to utilize Crisis Text Line learnings and data for the creation of ‘enterprise software.’

Credit: Screenshot: Wayback Machine

The interviewee recalls being confused about how empathy training and customer service software went together; they seemed like two separate directions for the company. The interviewee noticed what seemed to be tension over the company’s focus between Lublin and the other Loris employee present at the meeting. Loris disputes the characterization of tension between these two directions.

“There was no tension, and pivots (in our case a pivot away from training videos and towards machine learning and AI) is typical of many early stage startups,” the Loris spokeswoman said.

Even when putting together the team that would build this software, Lublin continued to use Loris’ pro-social mission of helping people have “hard conversations” in her recruiting, according to the interviewee.

“That was part of the pitch,” they said of the empathy and communication mission. “Part of the why you would want to work with us.”

The pivot

Loris’ software business began to solidify over the next year. Loris declined to comment on the specific timeline because its current CEO, Etie Hertz, did not join the company until 2019. But an ex-employee (who wants to remain anonymous to protect their identity for professional reasons) said that the pivot from making empathy training videos — which was in line with the mission Lublin had pitched to the board — to customer service software occurred less than a year after the company’s founding, in late 2018.

In 2019, the company began working on a Chrome extension that ingested customer service tickets, analyzed the messages (and their emotional overtones), and suggested responses. The software today appears to be a more sophisticated tool with a similar approach: sentiment analysis paired with response suggestions. The aim is to improve customer satisfaction and increase agents’ productivity.

A screenshot of Loris’ website today. Its motto? Scale without sacrifice.

Credit: Screenshot: Loris.ai

It’s easy to see how a Software-as-a-Service business that focuses on one type of interaction — customer service — is easier to grow (and monetize) than a video development and training software platform for people in many roles, communicating about everything from getting a raise to conflict among coworkers.

“It was a realization that real-time AI software could ultimately help us achieve our goal of better conversations at scale, whereas training content would have a far more limited impact,” the Loris spokeswoman said.

The pivot makes sense to Elizabeth Segal, an Arizona State University professor of social work, who researches empathy, because of the complexity of the original mission.

“They may have had completely good intentions, and they discovered how hard it is,” Segal said.

But Loris’ characterization of the pivot today appears to equate empathy training among colleagues with customer support. This conflation is actually common in tech. Empathy has become a corporate buzzword that really means being attuned to a customer’s needs. But is training a boss on how to be a better listener, or coaching an employee on how to stand up for themself, really the same thing as helping a customer understand why they’re not entitled to a refund?

“Empathy does not necessarily include a distinct outcome,” Segal said. “The bottom line is, [a customer service agent] is going to do whatever they can to make sure I stay a customer. And that’s good. That’s what you want them to do for the company. But that doesn’t necessarily have anything to do with empathy.”

The ex-employee, too, was skeptical about the “mission” aspect of the company, and felt that the true purpose of the software was the same as at every other company: Surveil workers, resolve tickets, and increase productivity. In an early 2020 website redesign captured by the Wayback Machine, Loris’ site advertised its product as “enterprise software that helps boost your empathy AND your bottom line.” The website contains similar language today.

“Their whole spiel about empathy and being more human was just B.S.,” the ex-employee said. “It wasn’t about being more human, it was about being more robotic and tracking everything.”

Loris disputes the “robotic” characterization and explained that language suggestions are meant to improve the outcome of conversations “for everyone involved.”

Wayback Machine archives show the website undergoing a change to focus on customer service in 2020.

Credit: Screenshot: Wayback Machine

It’s possible that Loris’ relationship with Crisis Text Line was actually more about marketing than anything else. The ex-employee said CTL’s data ended up being immaterial to the actual product Loris created. At times, a colleague expressed frustration to the ex-employee when they were asked to use the CTL corpus, because the data just wasn’t useful.

“I cannot remember in the time I was there any instance of, ‘oh, this thing that the data scientists learned from Crisis [Text Line] data was super helpful for Loris,’ because they were two different universes,” the ex-employee said.

Loris confirmed that it did not, in fact, use CTL user data in the creation of its AI software, a strange detail considering the outrage incited by the Politico article over Loris’ access. Loris gives a vague description of how it actually used CTL’s data: Using the same “de-escalation” strategies that were born of “crisis counselor techniques and language choice” in customer service interactions.

“User language (e.g. those that text into Crisis Text Line) was not utilized in the development of our software,” Loris said.

That did not stop Loris from advertising the data’s role in the business on its website as recently as last January: “A spin-off of celebrated Crisis Text Line, we’ve handled the toughest conversations and learned from the largest mental health data set in history about what works and what doesn’t when it comes to navigating hard conversations.”

If the CTL connection was useful purely for marketing, and customer service was indeed more lucrative than workplace training, perhaps it could be all for the greater good. One of Loris’ main directives was to make money for CTL, after all.

But that did not pan out, either: Loris only came out of beta and launched “officially” in April 2021. Over the four years of its existence, Loris paid CTL about $21,000 in exchange for office space rentals, according to Politico, and had yet to generate a revenue share. Today, Loris says it is “scaling up quickly.”

The highest standards

It’s not clear how involved Crisis Text Line was in Loris’ evolution. CTL declined to comment on this matter, though it confirmed it was in touch with Loris recently to facilitate removing the CTL association from its website.

Boyd’s blog post provides some clarity. When the revelations about Lublin’s racial insensitivity that resulted in her firing came out, CTL went into triage mode. After Loris removed Lublin from its leadership team as well, CTL did not move to instate someone else. It then froze the relationship with Loris, ceased sharing data, and planned to revisit the contract in 2022. Basically, CTL focused on getting its own house in order.

Until late January, when Politico publicized Loris’ existence. The article raised awareness about the data-sharing arrangement, questioned its ethics, and reported CTL volunteers’ calls for reform. Days later, the FCC called for a stop to the data-sharing practice. CTL initially defended its relationship with Loris, but ultimately dissolved the partnership on January 31.

“We will always aim to hold ourselves to the highest standards,” the announcement blog reads.

Boyd’s blog post chronicling Crisis Text Line’s decision to create Loris ends with a series of questions. One reads: “Is there any structure in which lessons learned from a non-profit service provider can be transferred to a for-profit entity?” Hopefully Loris’ tale may help one day answer this question in the affirmative. But for Loris and Crisis Text Line? No, not today.

“The fact that it seems to have been positioning itself as one thing when it was ultimately something else destroys its credibility,” McCabe, the impact-focused companies advisor, said when Loris’ trajectory was described to him. “It sounds unfathomably unethical to me.”

Additional reporting by Rebecca Ruiz.
