Facebook releases community standards enforcement report

Facebook has just released its latest community standards enforcement report and the verdict is in: people are awful, and happy to share how awful they are with the world.

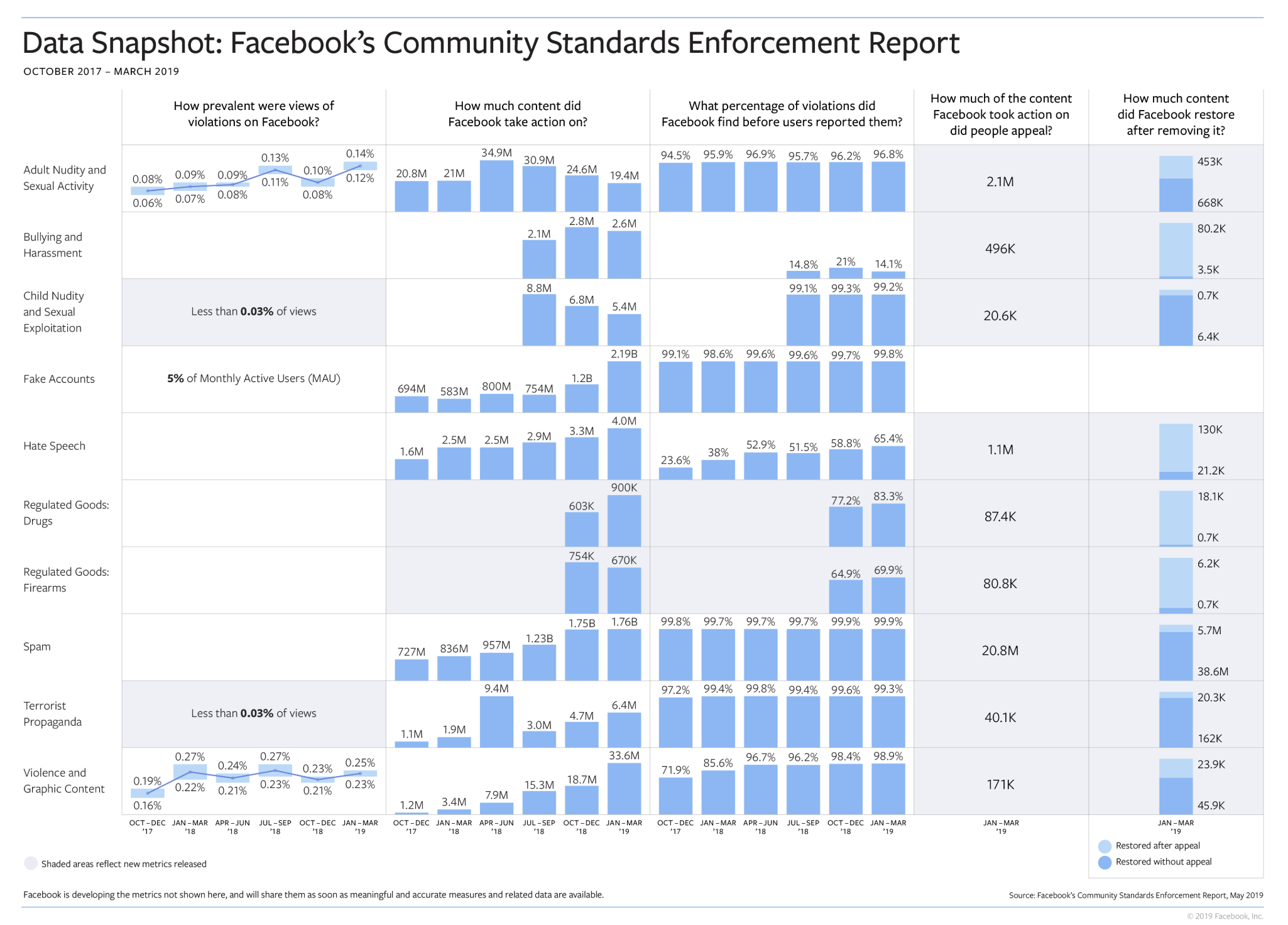

The latest effort at transparency from Facebook on how it enforces its community standards contains several interesting nuggets. While the company’s algorithms and internal moderators have become exceedingly good at tracking myriad violations before they’re reported to the company, hate speech, online bullying, harassment and the nuances of interpersonal awfulness still have the company flummoxed.

In most instances, Facebook is able to enforce its own standards and catches between 90% and over 99% of community standards violations itself. But those numbers are far lower for bullying and harassment, where Facebook caught only 14% of the 2.6 million instances reported, and for hate speech, where the company internally flagged 65.4% of the 4.0 million instances users reported.

By far the most common violation of community standards — and the one that’s potentially most worrying heading into the 2020 election — is the creation of fake accounts. In the first quarter of the year, Facebook found and removed 2.19 billion fake accounts. That’s a spike of 1 billion fake accounts created in the first quarter of the year.

Spammers also keep trying to leverage Facebook’s social network — and the company took down nearly 1.76 billion instances of spammy content in the first quarter.

For a real window into the true awfulness that people can achieve, there are the company’s self-reported statistics around removing child pornography and graphic violence. The company said it had to remove 5.4 million pieces of content depicting child nudity or sexual exploitation and that there were 33.6 million takedowns of violent or graphic content.

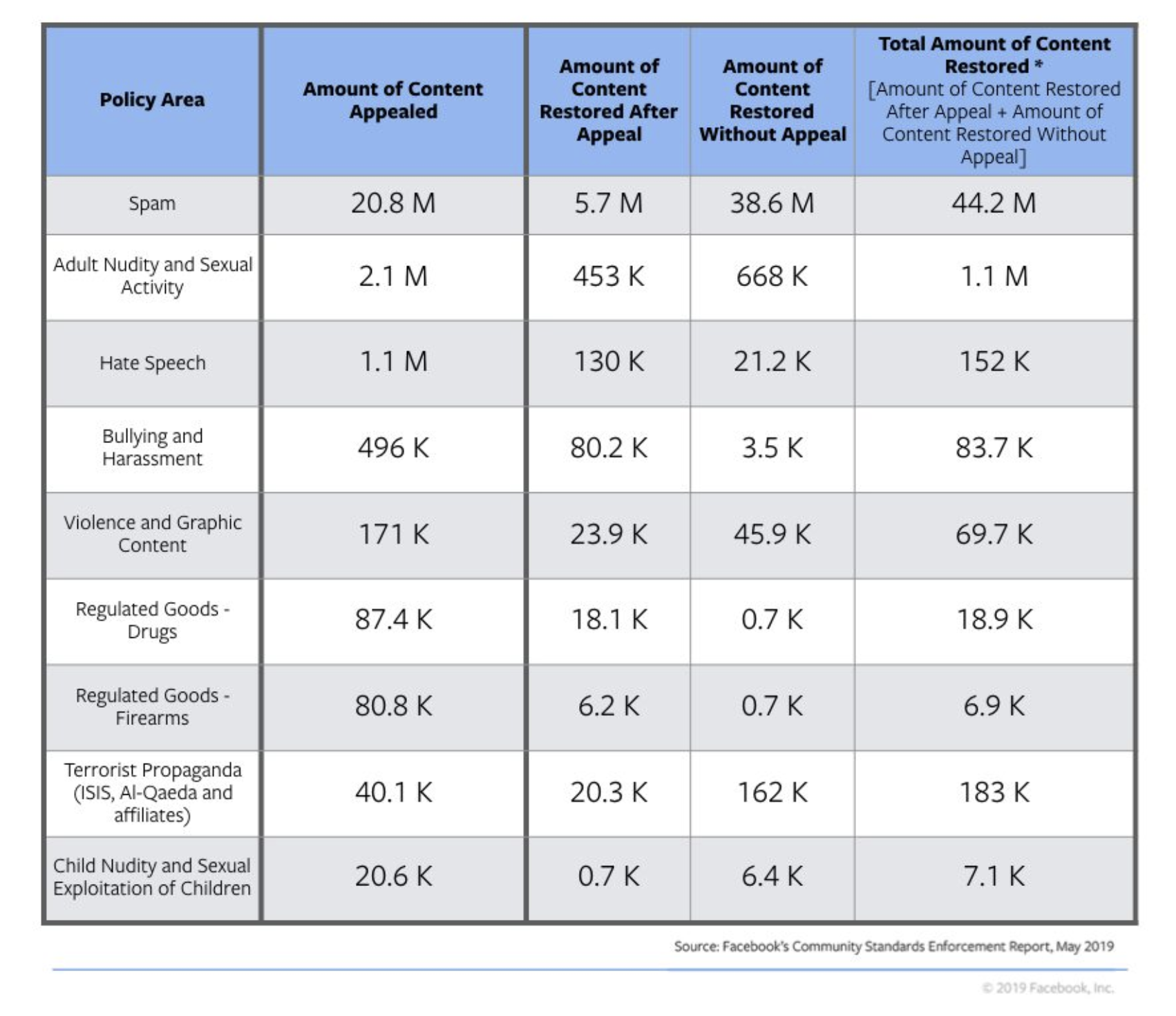

Interestingly, the areas where Facebook is the weakest on internal moderation are also the places where the company is least likely to reverse a decision on content removal. Although posts containing hate speech are among the most appealed types of content, they’re the least likely to be restored. Facebook reversed itself 152,000 times out of the 1.1 million appeals it heard related to hate speech. Another area where the company seemed immune to argument was posts related to the sale of regulated goods like guns and drugs.
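To put the hate speech appeal figures in proportion, here is a quick back-of-the-envelope calculation using only the two numbers cited above. The function and variable names are illustrative, not anything from Facebook's report.

```python
def rate(part: float, whole: float) -> float:
    """Return part as a percentage of whole."""
    if whole <= 0:
        raise ValueError("whole must be positive")
    return 100.0 * part / whole


# Figures as cited in the article (rounded by the source).
hate_speech_appeals = 1_100_000
hate_speech_restored = 152_000

reversal_pct = rate(hate_speech_restored, hate_speech_appeals)
print(f"{reversal_pct:.1f}% of hate speech appeals ended in a reversal")
```

Since both inputs are rounded, the result is approximate: a little under one appeal in seven.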

In a further attempt to bolster its credibility and transparency, the company also released a summary of findings from an independent panel designed to give feedback on Facebook’s reporting and community guidelines themselves.

Facebook summarized the findings from the 44-page report by saying the commission validated that Facebook’s approach to content moderation was appropriate and its audits well-designed “if executed as described.”

The group also recommended that Facebook develop more transparent processes and greater input for users into community guidelines policy development.

Recommendations also called for Facebook to incorporate more of the reporting metrics used by law enforcement when tracking crime.

“Law enforcement looks at how many people were the victims of crime — but they also look at how many criminal events law enforcement became aware of, how many crimes may have been committed without law enforcement knowing and how many people committed crimes,” according to a blog post from Facebook’s Radha Iyengar Plumb, head of Product Policy Research. “The group recommends that we provide additional metrics like these, while still noting that our current measurements and methodology are sound.”

Finally, the report recommended a number of steps for Facebook to improve, which the company summarized as follows:

- Additional metrics we could provide that show our efforts to enforce our policies such as the accuracy of our enforcement and how often people disagree with our decisions

- Further break-downs of the metrics we already provide, such as the prevalence of certain types of violations in particular areas of the world, or how much content we removed versus apply a warning screen to when we include it in our content actioned metric

- Ways to make it easier for people who use Facebook to stay updated on changes we make to our policies and to have a greater voice in what content violates our policies and what doesn’t

Meanwhile, examples of what regulation might look like are beginning to proliferate, aimed at ensuring that Facebook takes the right steps and remains accountable to the countries in which it operates.

It’s hard to moderate a social network that’s larger than the world’s most populous countries, but accountability and transparency are critical to preventing the problems that exist on those networks from putting down permanent, physical roots in the countries where Facebook operates.
